In this tutorial, we will learn how to run a model from the HuggingFace Hub locally on our own machine and ask it questions, just as we would with ChatGPT. If you are worried about the privacy of models hosted by someone else, this is a good place to start: hosting the model on your own machine gives you full control over it.

The tools we will need are as follows:

- Python. We need Python 3.

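- LangChain. LangChain gives us libraries in JavaScript and Python for interacting with LLMs more easily.

- Transformers. HuggingFace's `transformers` library provides the tokenizer, model, and pipeline classes used in the code below.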
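Before going further, it can help to confirm that the environment is ready. The sketch below is an addition to the tutorial, not part of the original code: it checks for Python 3 and reports which packages cannot be imported. The helper name `missing_packages` and the package list (`langchain`, `transformers`, `torch`) are assumptions based on the imports used later in this post.

```python
import importlib.util
import sys


def missing_packages(names):
    """Return the packages from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Assumed package list, based on the imports used later in this tutorial.
REQUIRED = ["langchain", "transformers", "torch"]

if sys.version_info.major < 3:
    print("Python 3 is required")

missing = missing_packages(REQUIRED)
if missing:
    print("Install these first, e.g. with pip:", ", ".join(missing))
else:
    print("All required packages were found")
```

If anything is reported as missing, installing it with `pip install <package>` is usually enough.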

First, we import `HuggingFacePipeline` from LangChain, as well as `AutoTokenizer`, `pipeline`, and `AutoModelForSeq2SeqLM` from `transformers`.

Pipelines make it easy for us to instantiate and interact with the model locally. `AutoTokenizer` tokenizes the input text, which in this case is our query. `AutoModelForSeq2SeqLM` is needed for models with an encoder-decoder architecture, like T5. You can read more about the encoder-decoder architecture here.
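To make the tokenizer's role concrete, here is a small sketch that is not from the original post. The helper names `encode_and_decode` and `demo` are hypothetical. The sketch assumes a HuggingFace tokenizer object, which can be called on a string to produce `input_ids` and which offers a `decode` method to turn ids back into text.

```python
def encode_and_decode(tokenizer, text):
    """Turn text into token ids, then turn the ids back into text."""
    token_ids = tokenizer(text)["input_ids"]
    restored = tokenizer.decode(token_ids, skip_special_tokens=True)
    return token_ids, restored


def demo(model_id="google/flan-t5-large"):
    # Imported lazily so that defining the helper does not require transformers.
    from transformers import AutoTokenizer

    # Downloads the tokenizer files on first use.
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    ids, text = encode_and_decode(tokenizer, "Who won world cup in 1998?")
    print(ids)   # a list of integer token ids
    print(text)  # the query decoded back from those ids
```

Calling `demo()` needs the `transformers` package and a network connection for the first download. The model itself never sees raw text, only the integer ids the tokenizer produces.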

For this example, we use the Flan-T5 model from Google. We specify the model ID, instantiate our tokenizer and our model, and declare that this is a "text2text-generation" task. With the help of `HuggingFacePipeline`, we can then query the model directly.

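```python
from langchain.llms import HuggingFacePipeline
from transformers import AutoTokenizer, pipeline, AutoModelForSeq2SeqLM

model_id = "google/flan-t5-large"

# Download (on first run) and load the tokenizer and the model.
tokenizer = AutoTokenizer.from_pretrained(model_id)
# load_in_8bit needs a CUDA GPU plus bitsandbytes and accelerate; drop it to run on CPU.
model = AutoModelForSeq2SeqLM.from_pretrained(model_id, load_in_8bit=True)

# Wrap the model and tokenizer in a text2text-generation pipeline.
pipe = pipeline("text2text-generation", model=model, tokenizer=tokenizer, max_length=100)

# Hand the pipeline to LangChain and call it with a prompt string.
local_llm = HuggingFacePipeline(pipeline=pipe)
print(local_llm("Who won world cup in 1998?"))
```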

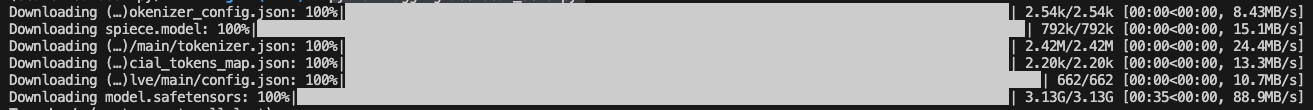
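Loading the model in 8-bit reduces memory use, but it typically relies on a CUDA GPU and on the `bitsandbytes` and `accelerate` packages. The sketch below is an addition, not the original code: it picks loading options based on whether a GPU is available. The helper names `model_load_kwargs` and `load_model` are hypothetical, and passing `device_map="auto"` alongside the 8-bit flag is an assumption about a sensible default.

```python
def model_load_kwargs(cuda_available):
    """Choose from_pretrained options: 8-bit on a GPU, library defaults on CPU."""
    if cuda_available:
        # Assumes bitsandbytes and accelerate are installed.
        return {"load_in_8bit": True, "device_map": "auto"}
    return {}


def load_model(model_id="google/flan-t5-large"):
    # Imported lazily: torch and transformers are only needed when loading.
    import torch
    from transformers import AutoModelForSeq2SeqLM

    kwargs = model_load_kwargs(torch.cuda.is_available())
    return AutoModelForSeq2SeqLM.from_pretrained(model_id, **kwargs)
```

On a machine without a GPU, `load_model()` falls back to a plain full-precision load, which is slower and uses more memory but avoids the extra dependencies.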

If you run the file with `python huggingfacelocal_demo.py`, you will see that the model is first downloaded to your machine.

Then we get our answer. The result isn't perfect. As in the previous tutorial, where we ran our model on the HuggingFace Hub, you can experiment with other models and ask more use-case-specific questions to get better results.
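One way to experiment is to send a batch of questions to the model and compare the answers side by side. This is a minimal sketch, not part of the original tutorial. The helper names `ask_all` and `print_answers` are hypothetical, and the sketch assumes `llm` is any object that can be called with a prompt string and returns text.

```python
def ask_all(llm, questions):
    """Send each question to the model and collect the answers by question."""
    return {question: llm(question) for question in questions}


def print_answers(answers):
    """Print each question with its answer for easy comparison."""
    for question, answer in answers.items():
        print(f"Q: {question}\nA: {answer}\n")
```

For example, `print_answers(ask_all(local_llm, ["Who won world cup in 1998?", "What is the capital of France?"]))` would run both questions through the local model, assuming `local_llm` accepts a prompt when called. Swapping in a different `model_id` and rerunning the same questions makes it easier to compare models.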